Non-uniform memory access (NUMA)

Non-uniform memory access (NUMA) is a memory design for multiprocessors in which the time to access memory depends on where the memory sits relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor, or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often strongly associated with certain tasks or users.

NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. Commercial versions were developed during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies) and Honeywell Information Systems Italy (HISI) (later Groupe Bull).

Modern CPUs run considerably faster than the main memory they use. In the early days of computing and data processing, the CPU generally ran slower than its own memory. The performance lines of processors and memory crossed in the 1960s with the advent of the first supercomputers. Since then, CPUs have increasingly found themselves "starved for data" and have had to stall while waiting for data to arrive from memory (for Von Neumann architecture-based computers, see the Von Neumann bottleneck). Many supercomputer designs of the 1980s and 1990s focused on providing high-speed memory access rather than faster processors, allowing the computers to work on large data sets at speeds other systems could not approach.

NUMA addresses this problem by providing separate memory for each processor, which avoids the performance hit that occurs when several processors try to address the same memory. For problems involving spread data (common for servers and similar applications), NUMA can improve performance over a single shared memory by a factor of roughly the number of processors (or separate memory banks). Another approach to the same problem is the multi-channel memory architecture, in which a linear increase in the number of memory channels increases memory access concurrency linearly.
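The "factor of roughly the number of processors" claim can be illustrated with a deliberately simplified model. It assumes that every memory bank serves exactly one request per cycle and that, under NUMA, the data is perfectly spread so that each processor only touches its own bank. Caches, queueing and interconnect traffic are ignored, and the function names are hypothetical.

```python
from collections import Counter

def cycles_to_serve(requests):
    """Cycles needed when each bank serves one request per cycle.

    requests: one bank id per memory request. The busiest bank decides
    how long the whole batch takes.
    """
    if not requests:
        return 0
    return max(Counter(requests).values())

def workload(n_procs, reqs_per_proc, numa):
    """Bank id targeted by every request from every processor.

    Shared memory: all requests hit bank 0.
    NUMA with spread data: processor p only uses its own bank p.
    """
    return [p if numa else 0 for p in range(n_procs) for _ in range(reqs_per_proc)]

shared = cycles_to_serve(workload(4, 100, numa=False))
local = cycles_to_serve(workload(4, 100, numa=True))
print(shared, local, shared / local)  # 400 100 4.0
```

If the data is not spread (for example, every processor works on data held in bank 0), the NUMA case degenerates into the shared case. This is why the benefit is limited to workloads with spread data.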

Cache-coherent non-uniform memory access (ccNUMA):-

Cache: a cache is a small, very fast, non-shared memory that nearly all CPU architectures use to exploit locality of reference when accessing memory.

With NUMA, maintaining cache coherence across shared memory carries a significant overhead. Non-cache-coherent NUMA systems are simpler to design and build, but they become prohibitively complex to program under the standard von Neumann programming model.

When more than one cache holds the same memory location, ccNUMA typically uses inter-processor communication between cache controllers to keep a consistent memory image. For this reason, ccNUMA may perform poorly when multiple processors try to access the same memory area in rapid succession. NUMA support in operating systems tries to reduce how often this kind of access happens. It does so by allocating processors and memory in NUMA-friendly ways and by avoiding scheduling and locking algorithms that require NUMA-unfriendly accesses.

Alternatively, cache coherency protocols such as the MESIF protocol try to reduce the communication required to maintain cache coherency. Scalable Coherent Interface (SCI) is an IEEE standard that defines a directory-based cache coherency protocol to avoid the scalability limitations of earlier multiprocessor systems. For example, SCI is used as the basis for the NumaConnect technology.
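To make the directory idea concrete, here is a minimal sketch of the kind of bookkeeping a directory-based protocol performs. It is not the SCI protocol itself. It assumes a simplified line state: cached copies are tracked as a sharer set, and at most one node holds a modified copy. The sketch counts coherence messages, and the class and method names are hypothetical. The second scenario shows why ccNUMA suffers when processors write the same location in rapid succession: the line bounces between nodes on every write.

```python
class Directory:
    """Per-line directory kept at the home node (simplified sketch)."""

    def __init__(self):
        self.sharers = {}   # line address -> set of nodes holding a copy
        self.dirty = {}     # line address -> node holding a modified copy
        self.messages = 0   # coherence messages sent over the interconnect

    def read(self, node, addr):
        owner = self.dirty.get(addr)
        if owner is not None and owner != node:
            self.messages += 1          # fetch the modified copy from its owner
            del self.dirty[addr]        # the line is now clean and shared
        self.sharers.setdefault(addr, set()).add(node)

    def write(self, node, addr):
        others = self.sharers.get(addr, set()) - {node}
        self.messages += len(others)    # invalidate only the actual sharers
        self.sharers[addr] = {node}
        self.dirty[addr] = node

# Two nodes read a line, then one writes it: a single invalidation is sent,
# rather than a broadcast to every node in the system.
d = Directory()
d.read(0, 0x40)
d.read(1, 0x40)
d.write(0, 0x40)
print(d.messages)  # 1

# Two nodes writing the same line in turn: the line bounces on every write.
ping = Directory()
for i in range(10):
    ping.write(i % 2, 0x80)
print(ping.messages)  # 9
```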

Hardware implementations:-

As of 2011, ccNUMA systems were multiprocessor systems based on two processors. The first is the AMD Opteron, which can be implemented without external logic. The second is the Intel Itanium, which requires the chipset to support NUMA. Examples of ccNUMA-enabled chipsets are the SGI Shub (Super hub), the Intel E8870, the HP sx2000 (used in the Integrity and Superdome servers) and those found in NEC Itanium-based systems. Earlier ccNUMA systems, such as those from Silicon Graphics, were based on MIPS processors and on the DEC Alpha 21364 (EV7) processor.

Structure of a NUMA system:-

The NUMA architecture is common in multiprocessing systems. In addition to processors, these systems include multiple hardware resources such as memory, input/output devices, the chipset, networking devices and storage devices. Each collection of resources is a node. Multiple nodes are linked through a high-speed interconnect or bus.

Every NUMA system contains a coherent global memory and I/O address space that all processors in the system can access. The other components can vary, although at least one node must have memory, one must have I/O resources and one must have processors.

In this type of memory architecture, a processor is assigned a specific local memory for its own use, and this memory is placed close to the processor. The signal paths are shorter, which is why these processors can access local memory faster than non-local memory. Also, because non-local memory is not shared, delays (latency) drop considerably when multiple access requests arrive for the same memory location.

NUMA architecture:-

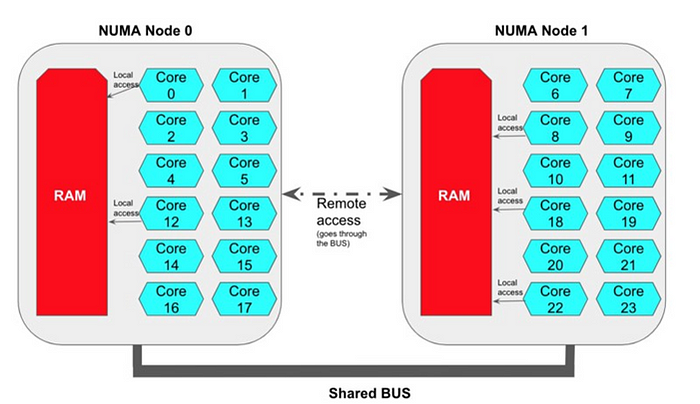

The combination of a CPU, RAM and a bus is known as a NUMA node. Connecting these NUMA nodes together gives you a non-uniform memory access system. In this setup every CPU can access all of the memory, but the speed of memory access is not the same everywhere. Accessing its own portion of memory is faster than accessing the memory attached to another CPU, hence the name "non-uniform memory access".

This is an ideal design because each CPU keeps the data it is working on in its own portion of memory. Even in the non-ideal case where a CPU needs memory that is further away, which is slower to reach, the access is still not as slow as it would be on a saturated shared bus. Apart from this basic arrangement, NUMA systems could in principle also be built from units of 2 CPUs sharing 1 bus and 1 bank of memory, with 2 such units connected together, or even from units of 3 or 4 CPUs. Examples include a NUMA system whose units have 2 CPUs connected by a single bus to a single memory, and one whose units have 4 CPUs sharing a single bus to a memory. In practice, however, the most widely used NUMA design is the one in which each CPU has a dedicated bus and memory.

One drawback of this design is that a CPU's access speed to different regions of memory depends on the distance between the CPU and the memory region it is accessing.

When a CPU accesses memory in its own NUMA node, this is considered a local memory access, and it has relatively low latency.

When a CPU accesses memory in another NUMA node, this is considered a remote memory access. It usually has higher latency, depending on how far that NUMA node is from the CPU's own NUMA node: the farther away it is, the higher the latency.
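Linux exposes this distance information as a node distance table. For example, `numactl --hardware` prints one, and each node's `distance` file under `/sys/devices/system/node/` holds one row of it. The sketch below uses a hypothetical 4-node table in that convention, where 10 means local. It makes the rough assumption that access cost scales with the distance ratio; real latencies have to be measured.

```python
# Hypothetical 4-node distance table (row = CPU's node, column = memory's node),
# in the convention Linux reports: 10 for local, larger values for farther nodes.
DISTANCE = [
    [10, 16, 16, 22],
    [16, 10, 22, 16],
    [16, 22, 10, 16],
    [22, 16, 16, 10],
]

def relative_cost(cpu_node, mem_node):
    """Rough access cost relative to a local access (assumes cost ~ distance)."""
    return DISTANCE[cpu_node][mem_node] / DISTANCE[cpu_node][cpu_node]

def nodes_by_distance(cpu_node):
    """Memory nodes from nearest to farthest for a CPU on cpu_node."""
    return sorted(range(len(DISTANCE)), key=lambda m: (DISTANCE[cpu_node][m], m))

print(relative_cost(0, 0), relative_cost(0, 1), relative_cost(0, 3))  # 1.0 1.6 2.2
print(nodes_by_distance(3))  # [3, 1, 2, 0]
```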

Here is another diagram of the same idea:

As shown in Figure 2, this is a typical dual-domain NUMA architecture with CPU cores C1-C16. Cores C1-C8 belong to NUMA domain 0 and cores C9-C16 belong to NUMA domain 1, and each domain is connected to its own RAM (denoted RAM 0 and RAM 1 for simplicity).

The cores are connected to their respective RAM through a memory controller (MC). A core that accesses memory across NUMA domains does so over the interconnect bus, and this is called a "remote access". An access to memory within the same NUMA domain is called a "local access". For example, if C1 accesses a memory address in RAM 1, it must go over the interconnect bus and through MC 1 to reach RAM 1, which is a remote access. If C1 accesses a memory address in RAM 0 through MC 0, that is a local access.

As you might imagine, a remote access incurs extra latency because the request has to traverse the interconnect bus. This latency can be nearly twice that of a local access.
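The two-domain layout of Figure 2 can be written down directly. The sketch below assumes a hypothetical local latency of 100 ns and takes the remote latency as exactly twice that, following the "nearly twice" figure above. It then shows how the expected latency grows with the fraction of accesses that cross the interconnect.

```python
# Model of the two-domain layout: cores C1-C8 in NUMA domain 0 (RAM 0),
# cores C9-C16 in NUMA domain 1 (RAM 1).
LOCAL_NS = 100   # hypothetical local access latency
REMOTE_NS = 200  # remote access, assumed to be exactly 2x local

def domain_of_core(core):
    """core is 1-based, as in C1..C16."""
    if not 1 <= core <= 16:
        raise ValueError("core must be in 1..16")
    return 0 if core <= 8 else 1

def access_kind(core, ram):
    """'local' if the core and the RAM bank are in the same NUMA domain."""
    return "local" if domain_of_core(core) == ram else "remote"

def average_latency(remote_fraction):
    """Expected latency when a fraction of accesses cross the interconnect."""
    return (1 - remote_fraction) * LOCAL_NS + remote_fraction * REMOTE_NS

print(access_kind(1, 0), access_kind(1, 1))  # local remote
print(average_latency(0.0), average_latency(0.25), average_latency(1.0))  # 100.0 125.0 200.0
```

In this model, every 10% of accesses that go remote adds 10 ns to the average. This is why software tries to keep the large majority of memory references local.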

NUMA For Linux:-

From the hardware perspective, a NUMA system is a computer platform made up of multiple components or assemblies, each of which may contain 0 or more CPUs, local memory and/or I/O buses. For brevity, and to distinguish the hardware view of these physical components/assemblies from their software abstraction, we'll call the components/assemblies "cells" in this document.

Each of the "cells" may be viewed as an SMP [symmetric multi-processor] subset of the system, although some components necessary for a stand-alone SMP system may not be populated on any given cell. The cells of the NUMA system are connected together by some sort of system interconnect; a crossbar and a point-to-point link are common types of NUMA system interconnect. Both types of interconnect can be aggregated to create NUMA platforms with cells at multiple distances from other cells.

For Linux, the NUMA platforms of interest are primarily what are known as Cache Coherent NUMA, or ccNUMA, systems. On ccNUMA systems, all memory is visible to and accessible from any CPU attached to any cell. Cache coherency is handled in hardware by the processor caches and/or the system interconnect.

Memory access time and effective memory bandwidth vary with the distance between the cell containing the CPU or I/O bus that makes the access and the cell containing the target memory. For example, CPUs accessing memory on their own cell will see faster access times and higher bandwidth than when they access memory on other, remote cells. NUMA platforms can have cells at multiple remote distances from any given cell.

Platform vendors don't build NUMA systems just to make software developers' lives interesting. Rather, this architecture is a means of providing scalable memory bandwidth. To achieve that scalable bandwidth, however, system and application software must arrange for a large majority of memory references [cache misses] to go to "local" memory. That means memory on the same cell, if any, or otherwise on the closest cell with memory.

This leads to the Linux software view of a NUMA system:

Linux divides the system's hardware resources into multiple software abstractions called "nodes". Linux maps the nodes onto the physical cells of the hardware platform, abstracting away some of the details for some architectures. As with physical cells, software nodes may contain 0 or more CPUs, memory and/or I/O buses. Again, accesses to memory on "closer" nodes (nodes that map to closer cells) will generally see faster access times and higher effective bandwidth than accesses to more remote cells.

For some architectures, such as x86, Linux will "hide" any node representing a physical cell that has no memory attached. It reassigns any CPUs attached to that cell to a node representing a cell that does have memory. Thus, on these architectures, one cannot assume that all CPUs that Linux associates with a given node will see the same local memory access times and bandwidth.

In addition, for some architectures (again, x86 is an example), Linux supports the emulation of additional nodes. For NUMA emulation, Linux carves up the existing nodes (or, for non-NUMA platforms, the system memory) into multiple nodes. Each emulated node manages a fraction of the underlying cells' physical memory. NUMA emulation is useful for testing NUMA kernel and application features on non-NUMA platforms. Used together with cpusets, it also serves as a kind of memory resource management mechanism. [see Documentation/cgroup-v1/cpusets.txt]
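As a rough illustration of carving up memory, the sketch below splits a machine's memory into N emulated nodes of nearly equal size. It is a hypothetical helper, not the kernel's algorithm, which also has to respect physical node boundaries and alignment. On x86 the emulation itself is requested at boot with an option of the form `numa=fake=<N>`; check the kernel-parameters documentation for your kernel version.

```python
def emulate_nodes(total_mib, n):
    """Split total_mib of memory into n emulated nodes of (nearly) equal size."""
    if n < 1:
        raise ValueError("need at least one node")
    base, extra = divmod(total_mib, n)
    # Give the leftover MiB to the first `extra` nodes so sizes differ by at most 1.
    return [base + (1 if i < extra else 0) for i in range(n)]

print(emulate_nodes(8192, 4))  # [2048, 2048, 2048, 2048]
print(emulate_nodes(1000, 3))  # [334, 333, 333]
```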

For each node with memory, Linux constructs an independent memory management subsystem. Each one has its own free page lists, in-use page lists, usage statistics and locks to mediate access. In addition, for each memory zone [one or more of DMA, DMA32, NORMAL, HIGH_MEMORY, MOVABLE], Linux constructs an ordered "zonelist". A zonelist specifies the zones/nodes to visit when a selected zone/node cannot satisfy an allocation request. This situation, in which a zone has no available memory to satisfy a request, is called "overflow" or "fallback".

Some nodes contain multiple zones holding different types of memory. Linux must therefore decide how to order the zonelists: allocations can fall back either to the same zone type on a different node, or to a different zone type on the same node. This is an important consideration because some zones, such as DMA or DMA32, represent relatively scarce resources. By default, Linux uses a node-ordered zonelist. This means it tries to fall back to other zones on the same node before using remote nodes, which are ordered by NUMA distance.
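The difference between the two orderings is easiest to see on a toy topology. In the sketch below, the nodes, zones and distances are hypothetical, and only node 0 has the scarce DMA/DMA32 zones. The request is assumed to be one that may be served from any of these zones, such as a NORMAL-zone request. The kernel builds separate lists per zone type and handles more cases than this.

```python
# Hypothetical topology: zones per node (preferred zone first) and node distances.
ZONES = {
    0: ["NORMAL", "DMA32", "DMA"],
    1: ["NORMAL"],
    2: ["NORMAL"],
}
DISTANCE = {
    0: {0: 10, 1: 20, 2: 30},
    1: {0: 20, 1: 10, 2: 20},
    2: {0: 30, 1: 20, 2: 10},
}

def nodes_by_distance(local):
    return sorted(ZONES, key=lambda n: (DISTANCE[local][n], n))

def node_ordered_zonelist(local):
    """Every zone of the nearest node first, then the next nearest node, and so on."""
    return [(n, z) for n in nodes_by_distance(local) for z in ZONES[n]]

def zone_ordered_zonelist(local, rank=("NORMAL", "DMA32", "DMA")):
    """Alternative: one zone type on all nodes before moving to a scarcer type."""
    return [(n, z) for z in rank for n in nodes_by_distance(local) if z in ZONES[n]]

print(node_ordered_zonelist(0))
# [(0, 'NORMAL'), (0, 'DMA32'), (0, 'DMA'), (1, 'NORMAL'), (2, 'NORMAL')]
print(zone_ordered_zonelist(0))
# [(0, 'NORMAL'), (1, 'NORMAL'), (2, 'NORMAL'), (0, 'DMA32'), (0, 'DMA')]
```

The node-ordered list stays on node 0 and dips into its DMA32/DMA memory before going remote. The zone-ordered list preserves those scarce zones at the cost of remote accesses.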

By default, Linux tries to satisfy memory allocation requests from the node to which the CPU executing the request is assigned. Specifically, Linux tries to allocate from the first node in the appropriate zonelist for the node where the request originates. This is called "local allocation". If the "local" node cannot satisfy the request, the kernel examines other nodes' zones in the selected zonelist, looking for the first zone in the list that can satisfy the request.
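Local allocation with fallback is essentially a walk down the zonelist. The sketch below is a simplified model with hypothetical names and page counts. The real page allocator also deals with watermarks, reclaim and allocation flags.

```python
def allocate(zonelist, free_pages, pages):
    """Take `pages` from the first zone in `zonelist` with enough free pages.

    zonelist: list of (node, zone) in fallback order; the first entry is local.
    free_pages: dict (node, zone) -> free page count (updated on success).
    Returns the (node, zone) used, or None if no zone can satisfy the request.
    """
    for key in zonelist:
        if free_pages.get(key, 0) >= pages:
            free_pages[key] -= pages
            return key
    return None

zonelist = [(0, "NORMAL"), (0, "DMA32"), (1, "NORMAL")]  # node 0's view
free = {(0, "NORMAL"): 4, (0, "DMA32"): 0, (1, "NORMAL"): 100}
print(allocate(zonelist, free, 2))     # (0, 'NORMAL'): local allocation
print(allocate(zonelist, free, 4))     # (1, 'NORMAL'): node 0 overflowed
print(allocate(zonelist, free, 1000))  # None: nothing can satisfy it
```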

Local allocation tends to keep later accesses to the allocated memory "local" to the underlying physical resources and off the system interconnect. This holds as long as the task on whose behalf the kernel allocated the memory does not later migrate away from it. The Linux scheduler is aware of the platform's NUMA topology, as embodied in the "scheduling domains" data structures [see Documentation/scheduler/sched-domains.txt], and it tries to minimize task migration to distant scheduling domains. However, the scheduler does not take a task's NUMA footprint into account directly. Thus, under sufficient imbalance, tasks can migrate between nodes, away from their initial node and kernel data structures.

System administrators and application designers can restrict a task's migration to improve NUMA locality. They can use CPU affinity command-line interfaces such as taskset(1) and numactl(1), and program interfaces such as sched_setaffinity(2). Further, the kernel's default local allocation behavior can be modified using Linux NUMA memory policy. [see Documentation/admin-guide/mm/numa_memory_policy.rst]
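From user space, the CPUs belonging to a node are listed in `/sys/devices/system/node/node<N>/cpulist`. On Linux, Python exposes sched_setaffinity(2) as `os.sched_setaffinity`. The sketch below pins the calling process to one node's CPUs; the helper names are hypothetical. Note that CPU affinity alone does not control where memory is allocated; that is the job of the memory policy mentioned above.

```python
import os

def parse_cpulist(text):
    """Parse a kernel cpulist such as "0-3,8-11" into a set of CPU numbers."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue  # a node without CPUs has an empty cpulist
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus

def pin_to_node(node):
    """Restrict the calling process to the CPUs of one NUMA node (Linux only)."""
    with open(f"/sys/devices/system/node/node{node}/cpulist") as f:
        cpus = parse_cpulist(f.read())
    if not cpus:
        raise ValueError(f"node {node} has no CPUs")
    os.sched_setaffinity(0, cpus)  # pid 0 means the calling process
    return os.sched_getaffinity(0)

print(sorted(parse_cpulist("0-3,8-11\n")))  # [0, 1, 2, 3, 8, 9, 10, 11]
```

The shell equivalents are `taskset -c 0-3 ./app`, or `numactl --cpunodebind=0 --membind=0 ./app`, which also binds the process's memory allocations to node 0.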

Using control groups and CPUsets, system administrators can restrict which CPUs and which nodes' memory a non-privileged user can specify in scheduling or NUMA commands and functions. [see Documentation/cgroup-v1/cpusets.txt]

On architectures that do not hide memoryless nodes, Linux includes only zones [nodes] with memory in the zonelists. This means that for a memoryless node, the "local memory node" (the node of the first zone in the CPU's node's zonelist) will not be the node itself. Instead, it will be the node that the kernel selected as the nearest node with memory when it built the zonelists. So, by default, local allocations succeed, with the kernel supplying the closest available memory. This is a consequence of the same mechanism that lets such allocations fall back to other nearby nodes when a node that does contain memory overflows.
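The rule for memoryless nodes can be sketched as follows, using a hypothetical 3-node machine whose node 1 has CPUs but no memory. Only nodes with memory enter the zonelist, so its first entry, the "local memory node", is the nearest node that actually has memory. The names are illustrative, not kernel functions.

```python
# Hypothetical 3-node machine: node 1 has CPUs but no memory.
HAS_MEMORY = {0: True, 1: False, 2: True}
DISTANCE = {
    0: {0: 10, 1: 20, 2: 30},
    1: {0: 20, 1: 10, 2: 15},
    2: {0: 30, 1: 15, 2: 10},
}

def zonelist_nodes(node):
    """Nodes with memory, nearest first; memoryless nodes are left out."""
    with_memory = [n for n in DISTANCE[node] if HAS_MEMORY[n]]
    return sorted(with_memory, key=lambda n: (DISTANCE[node][n], n))

def local_memory_node(node):
    """The node of the first entry in this node's zonelist."""
    return zonelist_nodes(node)[0]

print([local_memory_node(n) for n in (0, 1, 2)])  # [0, 2, 2]
```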

Some kernel allocations do not want, or cannot tolerate, this fallback behavior. Instead, they want to be sure they get memory from the specified node, or to be notified that the node has no free memory. This is usually the case when a subsystem allocates per-CPU memory resources, for example.

A typical way to make such an allocation is to obtain the ID of the node to which the "current CPU" is attached, using the kernel's numa_node_id() or cpu_to_node() functions, and then request memory from only that node. When such an allocation fails, the requesting subsystem may revert to its own fallback path; the slab kernel memory allocator is an example of this. Alternatively, the subsystem may choose to disable itself, or not to enable itself, on allocation failure; the kernel profiling subsystem is an example of this.

Consider an architecture that supports (does not hide) memoryless nodes. There, CPUs attached to memoryless nodes would always incur the fallback path overhead. Alternatively, some subsystems would fail to initialize if they tried to allocate memory exclusively from a node without memory. To support such architectures transparently, kernel subsystems can use the numa_mem_id() or cpu_to_mem() function to locate the "local memory node" for the calling or specified CPU. Again, this is the same node from which default, local page allocations will be attempted.
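A few lines of Python can model the difference between looking up a CPU's own node and looking up its local memory node. The functions below are hypothetical stand-ins named after the kernel helpers mentioned above; they are not the kernel's API. The topology is invented for the example: CPUs 2 and 3 sit on memoryless node 1, whose nearest node with memory is node 2.

```python
# Python stand-ins for the kernel ideas above; these are NOT kernel APIs.
CPU_NODE = {0: 0, 1: 0, 2: 1, 3: 1}    # CPUs 2-3 sit on memoryless node 1
FREE_PAGES = {0: 64, 1: 0, 2: 64}       # node 1 has no memory at all
LOCAL_MEMORY_NODE = {0: 0, 1: 2, 2: 2}  # nearest node with memory

def cpu_to_node_model(cpu):
    """Node the CPU is attached to (may be memoryless)."""
    return CPU_NODE[cpu]

def cpu_to_mem_model(cpu):
    """Nearest node with memory for the CPU's node."""
    return LOCAL_MEMORY_NODE[CPU_NODE[cpu]]

def alloc_exact_node(node, pages):
    """No fallback: succeed only on `node`, otherwise report failure (None)."""
    if FREE_PAGES.get(node, 0) >= pages:
        FREE_PAGES[node] -= pages
        return node
    return None

def alloc_per_cpu_buffer(cpu, pages, node_lookup):
    """Try the node chosen by node_lookup; None tells the caller to fall back."""
    return alloc_exact_node(node_lookup(cpu), pages)

print(alloc_per_cpu_buffer(2, 1, cpu_to_node_model))  # None: caller must fall back
print(alloc_per_cpu_buffer(2, 1, cpu_to_mem_model))   # 2
```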

Let's now look at what UMA (uniform memory access) is:

UMA uses a single memory controller. UMA is generally slower than NUMA, and its memory bandwidth is more restricted. Three types of bus are used in UMA: single, multiple and crossbar. UMA is suitable for general-purpose and time-sharing applications.

There are three types of UMA architecture: bus-based, crossbar-switch-based, and based on a multistage interconnection network.

Uniform memory access architectures are often contrasted with non-uniform memory access (NUMA) architectures. In the UMA architecture, each processor may use a private cache, and peripherals are also shared in some fashion. The UMA model is suitable for general-purpose and time-sharing applications used by multiple users. It can also be used to speed up the execution of a single large program in time-critical applications.

Evolution of shared-memory multiprocessors:-

Frank Denneman states that modern system architectures do not allow truly uniform memory access (UMA), even though these systems are specifically designed for that purpose. Simply put, the idea of parallel computing was to have a group of processors cooperate on a given task, thereby speeding up an otherwise classical sequential computation.

As Frank Denneman explains, in the early 1970s, with the introduction of relational database systems, “the need for systems that could service multiple concurrent user operations and excessive data generation became mainstream”. “Despite the impressive rate of uniprocessor performance, multiprocessor systems were better equipped to handle this workload. To provide a cost-effective system, shared memory address space became the focus of research. Early on, systems using a crossbar switch were advocated, however with this design complexity scaled along with the increase of processors, which made the bus-based system more attractive. Processors in a bus system can access the entire memory space by sending requests on the bus, a very cost-effective way to use the available memory as optimally as possible.”

However, bus-based computer systems come with a bottleneck: a limited amount of bandwidth, which leads to scalability problems. The more CPUs are added to the system, the less bandwidth is available per node. Furthermore, the more CPUs are added, the longer the bus becomes, and the higher the latency as a result.

Most CPUs were built in a two-dimensional plane, and CPUs also had to have integrated memory controllers added. The simple solution of giving each CPU core four memory buses (top, bottom, left and right) allowed full available bandwidth, but that only goes so far. CPUs stagnated at four cores for a considerable time. As chips became 3D, adding traces above and below allowed direct buses across to the diagonally opposed CPUs. Placing a four-core CPU on a card, which then connected to a bus, was the next logical step.

Today, each processor contains many cores with a shared on-chip cache and off-chip memory. Memory access costs vary across different parts of the memory within a server.

Improving the efficiency of data access is one of the main goals of contemporary CPU design. Each CPU core was given a small level 1 cache (32 KB) and a larger level 2 cache (256 KB). The cores would later share a level 3 cache of several MB, whose size has grown considerably over time.

To avoid cache misses (requests for data that is not in the cache), a great deal of research goes into choosing the right number of CPU caches, the caching structures and the corresponding algorithms.
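A standard way to reason about this trade-off is the average memory access time (AMAT). At each level, it is the hit time plus the miss rate times the cost of going one level further out. The sketch below uses hypothetical latencies and miss rates chosen for easy arithmetic, not measurements of any particular CPU. The innermost term, main-memory latency, is where local versus remote NUMA placement shows up.

```python
def amat(levels, memory_ns):
    """Average memory access time for a cache hierarchy.

    levels: list of (hit_time_ns, miss_rate) from L1 outward; a miss at one
    level pays the access time of the next level (main memory after the last).
    """
    time = memory_ns
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time

# Hypothetical numbers chosen for easy arithmetic, not measured values.
hierarchy = [(1.0, 0.10), (4.0, 0.50), (20.0, 0.50)]  # L1, L2, L3
print(round(amat(hierarchy, 100.0), 3))  # 1 + 0.1 * (4 + 0.5 * (20 + 0.5 * 100)) = 4.9
```

With these numbers, only 2.5% of accesses miss all three caches. Even so, doubling the memory latency from 100 ns to 200 ns, as for a remote access that costs twice a local one, raises the average from 4.9 ns to 7.4 ns.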

Differences between UMA and NUMA:-

| | NUMA | UMA |
|---|---|---|
| Full name | Non-uniform memory access | Uniform memory access |
| Memory controllers | Multiple memory controllers | A single memory controller |
| Bandwidth | More bandwidth than UMA | Limited bandwidth |
| Typical applications | Time-critical and real-time applications | General-purpose and time-sharing applications |
| Bus types | Two: tree and hierarchical | Three: single, multiple and crossbar |
| Example | TC-2000 | HP V series |

References:-

1. Nakul Manchanda; Karan Anand (2010-05-04). "Non-Uniform Memory Access (NUMA)" (PDF). New York University. Archived from the original (PDF) on 2013-12-28. Retrieved 2014-01-27.

2. Jonathan Corbet (2013-10-01). "NUMA scheduling progress". LWN.net. Retrieved 2014-02-06.

4. http://www.staroceans.org/from_UMA_to_NUMA.htm

5. https://www.techtarget.com/whatis/definition/NUMA-non-uniform-memory-access